A news outlet decided to use Microsoft's Bing Chat AI to write an op-ed article explaining why artificial intelligence is risky in journalism.

Based on what it wrote, Bing Chat AI is also against the use of AI tools in the news industry. Here's what it said.

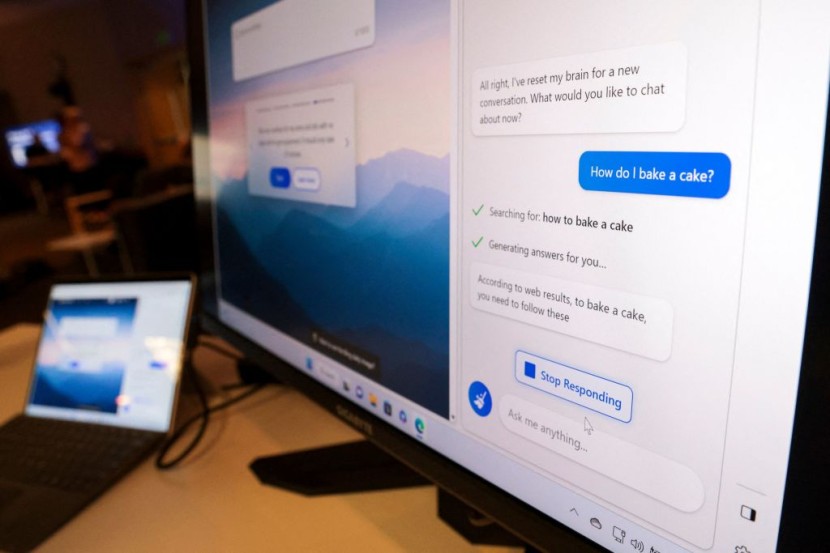

AI vs. AI? Microsoft Bing Chat AI Writes an Article About AI Risks in Journalism

The St. Louis Post-Dispatch, a Missouri-based newspaper, used Microsoft's Bing Chat AI program to write an editorial explaining why using artificial intelligence in journalism is a bad thing.

"Write a newspaper editorial arguing that artificial intelligence should not be used in journalism," said the newspaper's Editorial Board in its prompt.

After Bing Chat AI completed the article, the editorial team said that the arguments made by the bot were clear and persuasive.

They added that it is ironic that an AI chatbot is also against the use of artificial intelligence and other similar technologies in the news industry.

The St. Louis Post-Dispatch said it hopes the op-ed, written by an AI chatbot, will generate discussion among humans about using artificial intelligence to write news articles and other content.


What Bing Chat AI Wrote

According to Fox News' latest report, Bing Chat AI argued that although artificial intelligence has numerous benefits, this technology still poses more serious risks to journalism's ethics, integrity, and quality.

"One of the main reasons why AI should not be used in journalism is that it can undermine the credibility and trustworthiness of news," explained the chatbot.

The AI program added that artificial intelligence tools can easily write fake news, spread misinformation, and manipulate facts.

Bing Chat AI even provided some scenarios where AIs were used to produce fake news articles. These include a 2020 incident involving GPT-3.

The website that used OpenAI's advanced AI system clarified that it was a satire project. But Bing Chat AI said that the articles GPT-3 produced were so realistic that anyone could easily be fooled.

Aside from writing false articles, Microsoft's chatbot also warned that AIs can create deepfakes of people, altering what they did and said.

The AI chatbot added that these synthetic images and videos can be used to blackmail and defame individuals.

Of course, Bing Chat AI also talked about how AI can threaten the livelihood of real human journalists. The system explained that artificial intelligence can replace humans in many tasks.

These include writing headlines, reports, summaries, and stories. It added that AI tools can generate content faster without requiring news companies to spend lots of money.

These are just some of the warnings that Microsoft's Bing Chat AI provided.

© 2026 HNGN, All rights reserved. Do not reproduce without permission.